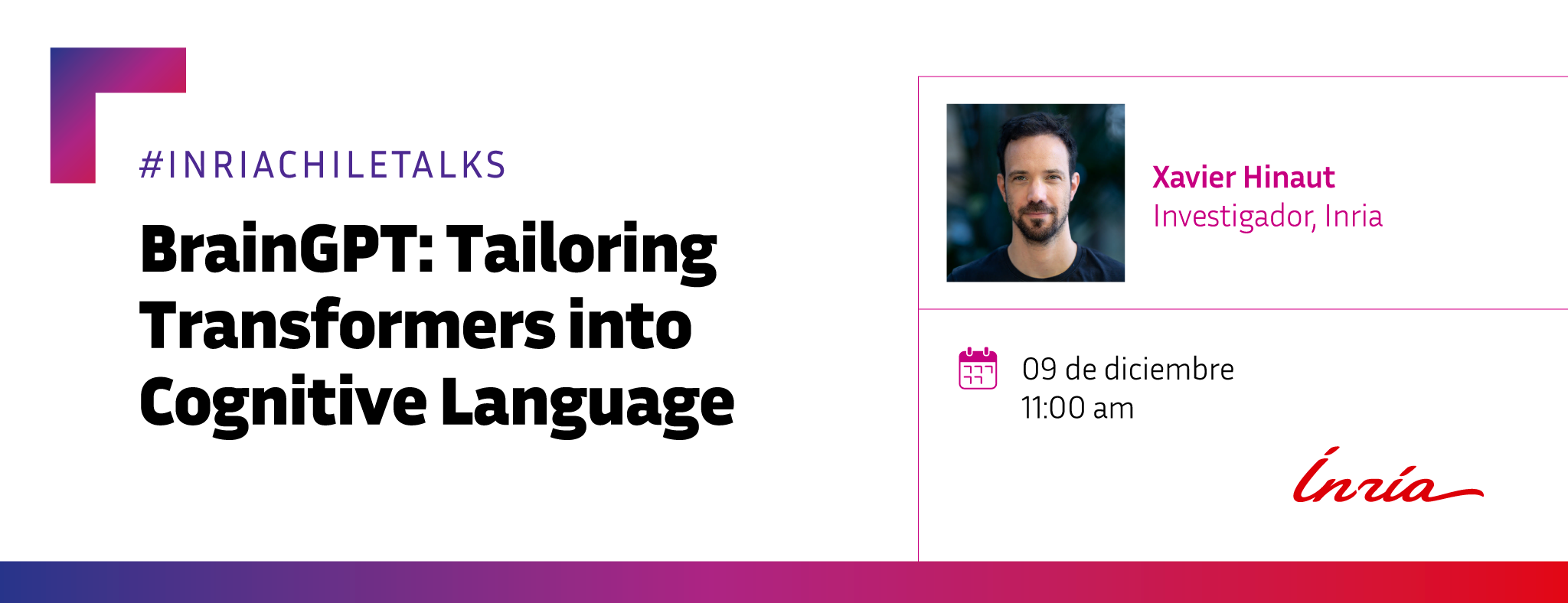

- Tuesday, December 09, 2025 - 11:00 am (Santiago, Chile time)

- Hybrid format

- The talk will be held in English

- Speaker: Xavier Hinaut, Research Scientist in Bio-inspired Machine Learning and Computational Neuroscience at Inria, Bordeaux, France, since 2016.

Abstract

Language involves several levels of abstraction, from small sound units such as phonemes to contextual, sentence-level understanding. Large Language Models (LLMs) have shown an impressive ability to predict human brain recordings. For instance, while a participant listens to a chapter of a Harry Potter book, LLMs can predict part of the brain activity recorded with functional magnetic resonance imaging (fMRI) or electroencephalography (EEG) at the phoneme or word level. These striking results are likely due to their hierarchical architectures and massive training data. Despite these feats, LLMs differ significantly from how our brains work and provide little insight into how the brain processes language. We will see how simple recurrent neural networks, such as those used in Reservoir Computing, can model language acquisition from limited and ambiguous contextual data better than LSTMs. Building on these results, the BrainGPT project explores various architectures inspired by both reservoirs and LLMs, combining random projections and attention mechanisms to build models that train faster on less data and offer greater biological insight.
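For readers new to Reservoir Computing, the core idea can be sketched as a minimal echo state network: the input and recurrent weights are drawn at random and kept fixed, and only a linear readout is trained, here by ridge regression. This is a generic illustration written with NumPy, assuming a standard leaky-integrator update; it is not the BrainGPT architecture, the language models discussed in the talk, or the ReservoirPy API. The function names, hyperparameters (100 units, spectral radius 0.9, leak rate 0.3, ridge 1e-6) and the sine-wave prediction task are hypothetical choices for the example.

```python
import numpy as np

def make_reservoir(n_inputs, n_units, spectral_radius=0.9, input_scaling=1.0, seed=0):
    """Draw fixed random input and recurrent weights (these are never trained)."""
    rng = np.random.default_rng(seed)
    W_in = rng.uniform(-input_scaling, input_scaling, size=(n_units, n_inputs))
    W = rng.uniform(-0.5, 0.5, size=(n_units, n_units))
    # Rescale W so that its largest absolute eigenvalue equals spectral_radius
    W *= spectral_radius / np.max(np.abs(np.linalg.eigvals(W)))
    return W_in, W

def run_reservoir(W_in, W, inputs, leak_rate=0.3):
    """Collect reservoir states for an input sequence of shape (T, n_inputs)."""
    x = np.zeros(W.shape[0])
    states = []
    for u in inputs:
        # Leaky-integrator update with a tanh nonlinearity; no learning happens here
        x = (1 - leak_rate) * x + leak_rate * np.tanh(W_in @ u + W @ x)
        states.append(x.copy())
    return np.array(states)

def train_readout(states, targets, ridge=1e-6):
    """Fit the linear readout (the only trained part) with ridge regression."""
    X = np.hstack([states, np.ones((states.shape[0], 1))])  # append a bias column
    A = X.T @ X + ridge * np.eye(X.shape[1])
    return np.linalg.solve(A, X.T @ targets)

def predict(states, W_out):
    """Apply the trained readout to reservoir states."""
    X = np.hstack([states, np.ones((states.shape[0], 1))])
    return X @ W_out

# Hypothetical task: one-step-ahead prediction of a sine wave
t = np.arange(0, 60, 0.1)
signal = np.sin(t)[:, None]                 # shape (T, 1)
W_in, W = make_reservoir(n_inputs=1, n_units=100)
states = run_reservoir(W_in, W, signal[:-1])
warmup = 50                                 # discard the initial transient states
targets = signal[1:][warmup:]
W_out = train_readout(states[warmup:], targets)
pred = predict(states[warmup:], W_out)
mse = float(np.mean((pred - targets) ** 2))
print(f"training MSE: {mse:.2e}")
```

Because only `W_out` is learned, training reduces to a single linear solve rather than backpropagation through time, which is one reason reservoirs can be trained quickly.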

Xavier Hinaut


He received an MSc and an engineering degree from Compiègne Technology University (UTC), France, in 2008, an MSc in Cognitive Science & AI from École Pratique des Hautes Études (EPHE), France, in 2009, and his PhD from Lyon University, France, in 2013. He is Chair of the IEEE CIS Task Force on Reservoir Computing.

His work lies at the frontier of neuroscience, machine learning, robotics and linguistics: from the modeling of human sentence processing to the analysis of birdsongs and their neural correlates. He uses reservoirs both as machine learning tools (e.g., birdsong classification) and as models (e.g., sensorimotor models of how birds learn to sing). He manages the “DeepPool” ANR project on human sentence modeling with deep reservoir architectures and the Inria Exploratory Action “BrainGPT” on Reservoir Transformers. He leads the development of ReservoirPy, the most up-to-date Python library for Reservoir Computing: https://github.com/reservoirpy/reservoirpy

He is also involved in public outreach, notably by organising hackathons such as www.hack1robo.fr, which have produced creative projects linking AI and art (e.g., the ReMi project, in which a reservoir generates MIDI and sounds, now continued by the startup Allendia).